Enterprise LLM Resource Scheduler

Solving the critical challenges of LLM integration with Google Cloud-powered infrastructure

The Challenges Businesses Face

As AI technology advances rapidly, more enterprises are integrating large language models (LLMs) into core business processes. In practice, however, the technical challenges are often more complex than expected:

- API rate limits that interrupt business operations

- Significant price differences between model providers

- Inconsistent service quality across providers, which degrades the user experience

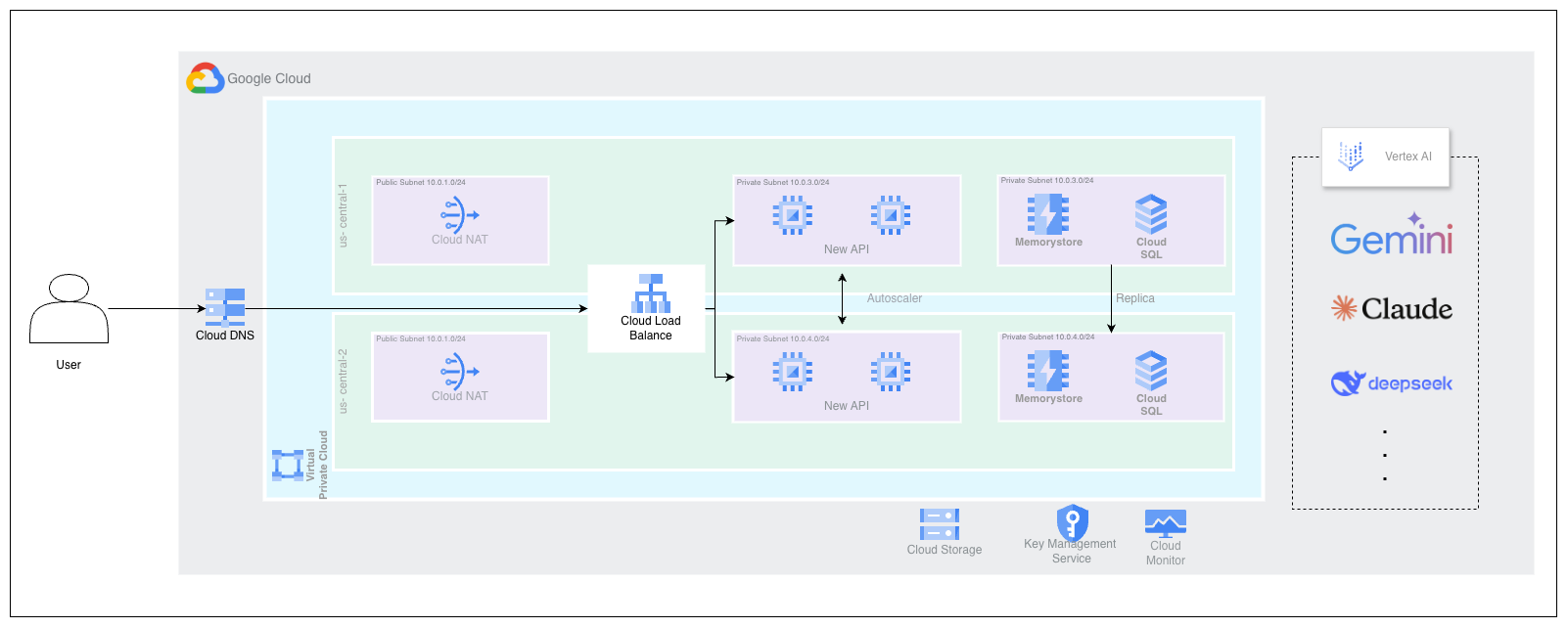

Technical Architecture on Google Cloud

LLM Resource Scheduler: Our Google Cloud-Powered Solution

Our system builds on the Google Cloud ecosystem to deliver a reliable, cost-effective platform for managing LLM usage:

Cloud Load Balancing

Distributes traffic across multiple models and selects the best processing path for each request based on real-time conditions
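The text does not say how the "optimal processing path" is chosen. One plausible approach is to score each provider on recent latency, price, and error rate, and to skip providers that are currently rate limited. The sketch below assumes that this decision lives in the scheduler's application layer behind the load balancer; `ProviderStats`, `score`, `choose_provider`, and the weights are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProviderStats:
    """Hypothetical real-time snapshot of one model provider."""
    name: str
    p50_latency_ms: float       # recent median latency in milliseconds
    error_rate: float           # fraction of failed calls in the recent window (0.0 to 1.0)
    cost_per_1k_tokens: float   # price in USD per 1,000 tokens
    rate_limited: bool = False  # True while the provider is rejecting calls for rate limits


def score(p: ProviderStats,
          latency_weight: float = 1.0,
          cost_weight: float = 100.0,
          error_weight: float = 1000.0) -> float:
    """Lower is better: a weighted sum of latency, price and error rate.

    The weights are illustrative assumptions and would need tuning per workload.
    """
    return (latency_weight * p.p50_latency_ms
            + cost_weight * p.cost_per_1k_tokens
            + error_weight * p.error_rate)


def choose_provider(providers: list[ProviderStats]) -> ProviderStats:
    """Pick the lowest-scoring provider that is not currently rate limited."""
    candidates = [p for p in providers if not p.rate_limited]
    if not candidates:
        raise RuntimeError("all providers are currently rate limited")
    return min(candidates, key=score)
```

For example, a provider with 400 ms latency, a 1% error rate, and a price of 0.5 scores 460, which beats one with 300 ms latency, no errors, and a price of 2.0 (score 500). A cheaper, faster provider that is currently rate limited is skipped entirely.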

Autoscaler

Automatically scales resources up or down with load, keeping the service stable during peak periods
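Many autoscalers use a proportional target-tracking rule; Kubernetes' Horizontal Pod Autoscaler, for instance, documents `desired = ceil(current * observed / target)`. We assume a similar rule here for illustration. Google Cloud's managed autoscaler applies its own policy, so `desired_replicas` and its default bounds are hypothetical.

```python
import math


def desired_replicas(current: int,
                     utilization: float,
                     target: float = 0.6,
                     min_replicas: int = 1,
                     max_replicas: int = 20) -> int:
    """Proportional scaling rule: size the group so utilization returns to `target`.

    `utilization` is the observed average load per replica as a fraction of
    capacity (e.g. CPU); the target and the bounds are assumptions.
    """
    if current < 1:
        raise ValueError("current must be at least 1")
    if not 0.0 < target <= 1.0:
        raise ValueError("target must be in (0, 1]")
    raw = math.ceil(current * utilization / target)
    # Clamp to the configured minimum and maximum group size.
    return max(min_replicas, min(max_replicas, raw))
```

For example, 4 replicas running at 90% utilization with a 50% target scale out to ceil(7.2) = 8. The upper bound caps spending during extreme spikes.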

Cloud Storage

Provides highly available, persistent storage for model configurations, log data, and cached content
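The model configurations kept in Cloud Storage might plausibly be a JSON document that the scheduler validates at startup. The schema below (`models`, `max_requests_per_minute`, `cost_per_1k_tokens`) and `parse_model_configs` are hypothetical. In a deployment, the raw text could be fetched with the `google-cloud-storage` client via `storage.Client().bucket(BUCKET).blob(PATH).download_as_text()`, where the bucket and path are placeholders. That fetch is left out here so that the sketch runs offline.

```python
import json
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelConfig:
    """Hypothetical per-model settings the scheduler needs for routing."""
    name: str
    provider: str
    max_requests_per_minute: int
    cost_per_1k_tokens: float


def parse_model_configs(raw_json: str) -> dict[str, ModelConfig]:
    """Parse and validate a JSON config document into ModelConfig objects keyed by name."""
    data = json.loads(raw_json)
    configs: dict[str, ModelConfig] = {}
    for entry in data["models"]:
        cfg = ModelConfig(
            name=entry["name"],
            provider=entry["provider"],
            max_requests_per_minute=int(entry["max_requests_per_minute"]),
            cost_per_1k_tokens=float(entry["cost_per_1k_tokens"]),
        )
        # Reject obviously invalid settings before they reach the router.
        if cfg.max_requests_per_minute <= 0:
            raise ValueError(f"{cfg.name}: max_requests_per_minute must be positive")
        if cfg.name in configs:
            raise ValueError(f"duplicate model name: {cfg.name}")
        configs[cfg.name] = cfg
    return configs
```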

Key Management Service

Securely manages API keys across multiple projects, along with other sensitive configuration
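Keeping keys from several projects also makes it possible to spread traffic across separate quotas when one key hits a rate limit. The sketch below assumes that the keys have already been decrypted from their protected store. It rotates through the keys round-robin and rests any key that reports a rate-limit error. `ApiKeyPool`, the cooldown length, and the project names are hypothetical.

```python
import itertools
import time
from typing import Callable


class ApiKeyPool:
    """Round-robin over API keys from several projects, skipping keys on cooldown."""

    def __init__(self, keys: dict[str, str], cooldown_s: float = 60.0,
                 clock: Callable[[], float] = time.monotonic):
        if not keys:
            raise ValueError("at least one key is required")
        self._keys = keys                      # project id -> already-decrypted API key
        self._order = itertools.cycle(list(keys))
        self._cooldown_s = cooldown_s
        self._clock = clock                    # injectable so tests can control time
        self._blocked_until: dict[str, float] = {}

    def acquire(self) -> tuple[str, str]:
        """Return (project_id, api_key) for the next key that is not cooling down."""
        now = self._clock()
        for _ in range(len(self._keys)):
            project = next(self._order)
            if self._blocked_until.get(project, 0.0) <= now:
                return project, self._keys[project]
        raise RuntimeError("all API keys are cooling down")

    def report_rate_limited(self, project: str) -> None:
        """Call after a rate-limit error (e.g. HTTP 429) to rest this project's key."""
        self._blocked_until[project] = self._clock() + self._cooldown_s
```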

Cloud Monitoring

Performance monitoring and alerting that track overall system health and the response status of each model provider
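Tracking provider response status can be as simple as keeping a sliding window of recent call outcomes per provider and flagging a provider once its error rate crosses a threshold. The sketch below assumes that approach. `ProviderHealth`, the window size, and the threshold are hypothetical. In the architecture described here, these values would presumably be exported to Cloud Monitoring for alerting; the export step is not shown.

```python
from collections import defaultdict, deque


class ProviderHealth:
    """Sliding-window error-rate tracker per provider."""

    def __init__(self, window: int = 100, max_error_rate: float = 0.2, min_samples: int = 10):
        self._max_error_rate = max_error_rate
        self._min_samples = min_samples
        # Each provider keeps only its most recent `window` outcomes (True = success).
        self._results: dict[str, deque] = defaultdict(lambda: deque(maxlen=window))

    def record(self, provider: str, ok: bool) -> None:
        self._results[provider].append(ok)

    def error_rate(self, provider: str) -> float:
        results = self._results.get(provider)
        if not results:
            return 0.0
        return results.count(False) / len(results)

    def is_healthy(self, provider: str) -> bool:
        results = self._results.get(provider)
        if results is None or len(results) < self._min_samples:
            return True  # too little data to judge; treat as healthy
        return self.error_rate(provider) <= self._max_error_rate
```

Because the window is bounded, a provider that recovers is marked healthy again once enough successful calls push the old failures out.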

Memorystore

High-performance in-memory caching layer that speeds up responses and cuts API call costs by serving repeated requests from cache
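A common pattern for this kind of caching layer is cache-aside: hash the model name and prompt into a key, return a cached answer if one exists, and otherwise call the model and store the result with an expiry. The sketch below assumes that pattern. `cached_completion` accepts any client exposing `get(name)` and `set(name, value, ex=seconds)`, which matches the shape of the redis-py client that could talk to Memorystore for Redis. `InMemoryCache` is a hypothetical stand-in so that the example runs without a server, and the `llm:` key prefix and TTL are assumptions.

```python
import hashlib
import json
import time
from typing import Callable, Optional


class InMemoryCache:
    """Test stand-in for a Redis client: get/set with an optional expiry in seconds."""

    def __init__(self, clock: Callable[[], float] = time.monotonic):
        self._data: dict[str, tuple[str, Optional[float]]] = {}
        self._clock = clock

    def get(self, name: str) -> Optional[str]:
        item = self._data.get(name)
        if item is None:
            return None
        value, expires_at = item
        if expires_at is not None and self._clock() >= expires_at:
            del self._data[name]  # lazily evict expired entries
            return None
        return value

    def set(self, name: str, value: str, ex: Optional[int] = None) -> bool:
        expires_at = self._clock() + ex if ex is not None else None
        self._data[name] = (value, expires_at)
        return True


def cache_key(model: str, prompt: str) -> str:
    """Deterministic key: SHA-256 of the canonical JSON form of the request."""
    payload = json.dumps({"model": model, "prompt": prompt}, sort_keys=True)
    return "llm:" + hashlib.sha256(payload.encode("utf-8")).hexdigest()


def cached_completion(cache, model: str, prompt: str,
                      call_model: Callable[[str, str], str], ttl_s: int = 3600) -> str:
    """Cache-aside lookup: serve from cache on a hit, otherwise call the model and store."""
    key = cache_key(model, prompt)
    hit = cache.get(key)
    if hit is not None:
        # redis-py returns bytes unless the client was created with decode_responses=True.
        return hit.decode("utf-8") if isinstance(hit, bytes) else hit
    result = call_model(model, prompt)
    cache.set(key, result, ex=ttl_s)
    return result
```

Identical prompts always receive the same stored answer until the entry expires, so this fits workloads where that is acceptable, such as deterministic or low-temperature requests.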